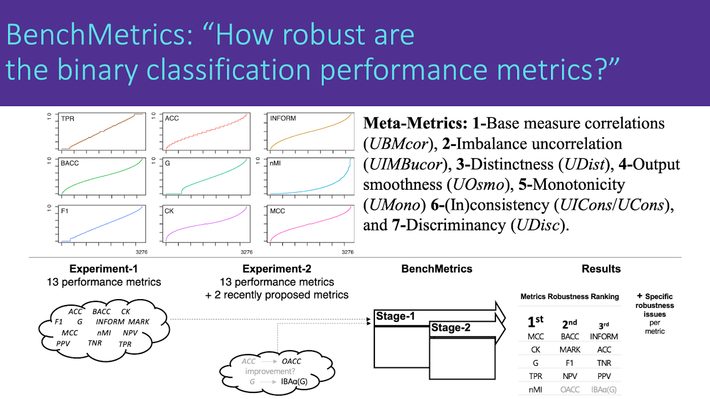

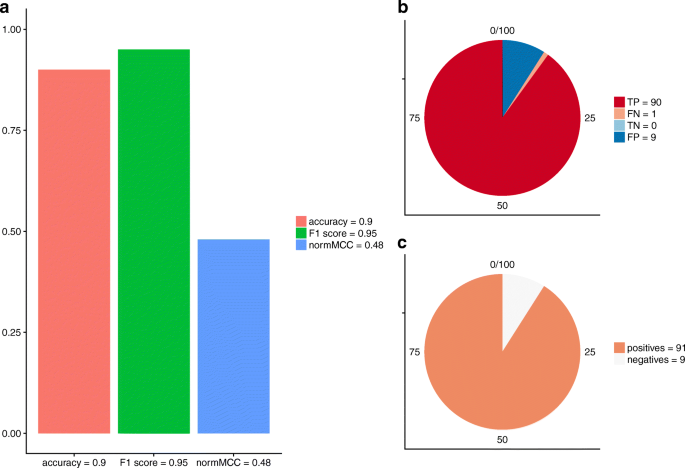

The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation (BMC Genomics)

MLViS: A Web Tool for Machine Learning-Based Virtual Screening in Early-Phase of Drug Discovery and Development (PLOS ONE)

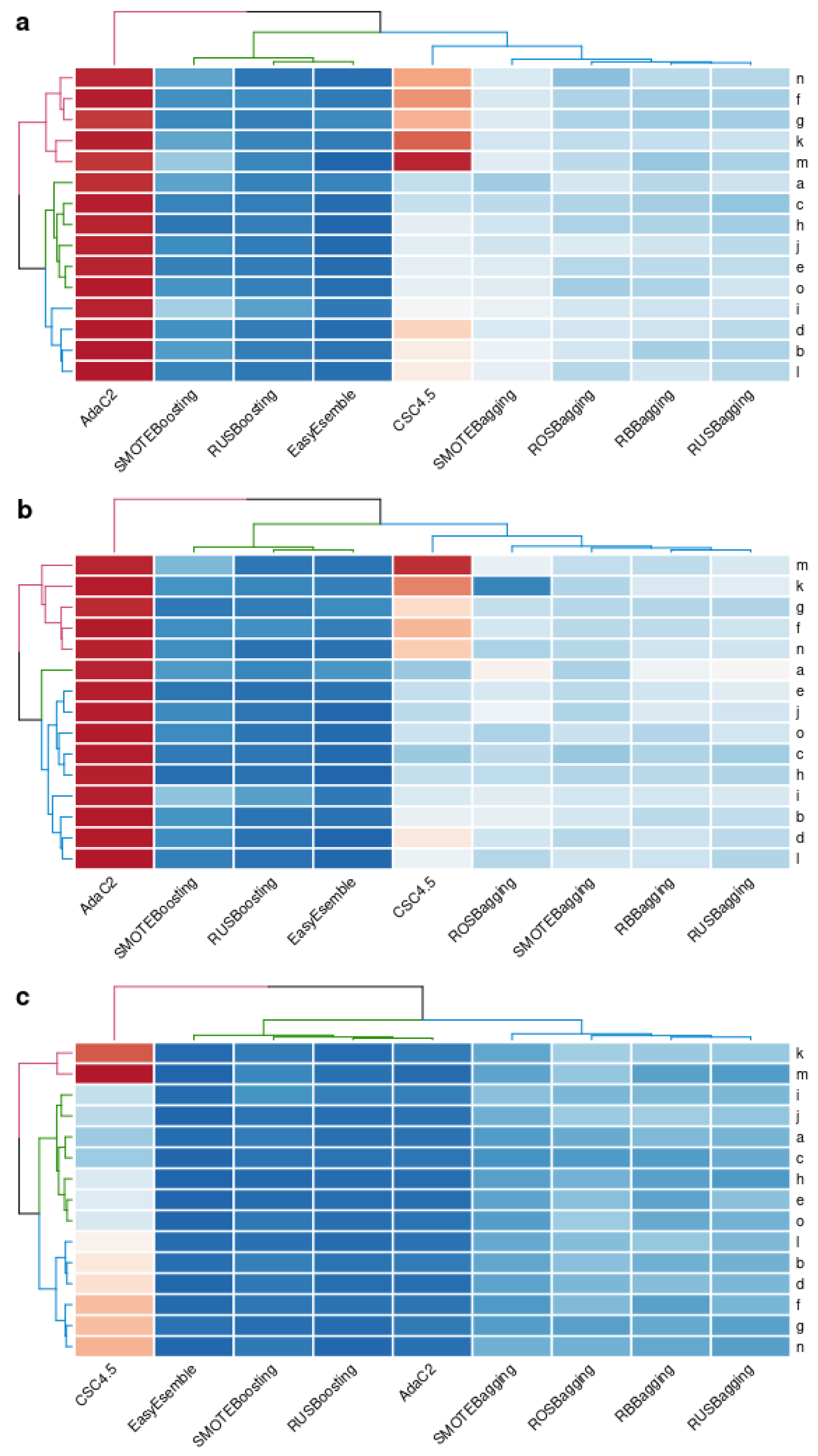

Exploring Ensemble-Based Class Imbalance Learners for Intrusion Detection in Industrial Control Networks (BDCC)